By Francesco Cipollone

Are you ready for another article filled with infosec goodies? If you missed the ISSA-LA conference, don’t you worry — we’ve got you covered with a summary of all the highlights.

The weather may not have lived up to expectations in LA — where the sun is ‘always’ shining — but one thing that never fails to impress is the ISSA-LA conference.

Please note this is the second of two articles walking you through the conference, so you can make sure you’re part of it next year.

If you missed the first part, you can read it here: https://www.itspmagazine.com/itsp-chronicles/how-to-do-an-infosec-con-right-issa-la-summit-does-it-again-part-1

First things first, what should you expect from this article? Well, of course, more infosec, starting with social engineering, some sun here and there, and lots of running along the beachfront in Los Angeles.

Lisa is the founder and CEO of the Women's Society of Cyberjutsu and offered a really interesting take on human hacking. She highlighted the fact that people can’t help being people, and that’s why we are all “hackable”.

So what is social engineering? https://en.wikipedia.org/wiki/Social_engineering_(security)

Put simply, or as simply as I can, it is the ability to gain access to a system and retrieve information by tricking humans into trusting you.

Lisa shared some really interesting stories, including how being a woman can actually make life easier when it comes to social engineering.

One of the stories she shared was about how she once managed to social engineer her way all the way into the server room of the main building. She was eventually caught, but the funny twist is that, after being escorted upstairs by the security officer, she was left alone in the main building again!

Please don’t take the following statement the wrong way, but it is true.

This is quite a common story among women working in male-dominated environments, as they are able to use sweet talk and feminine wiles to get one over on their male counterparts.

Another funny story Lisa shared was about a scam call she received and how the scammer tried to social engineer her. Little did they know the background she came from! Ultimately she got frustrated with the caller and hung up.

A good friend of mine, Stuart Peck, uses these kinds of calls as a fun exercise to waste a scammer's time and therefore prevent other people from getting scammed.

Lisa also shared the techniques used during social engineering exercises and how they have been applied, to help us all be a little more aware of when we might be the target!

She also shared some really helpful advice on how we can all avoid getting scammed:

Use two-factor authentication (what you know and what you have). Why? Because it makes the scammer’s life harder: as well as your password, they would need your second factor, such as the text messages sent to your phone or the phone itself.

Check the grammar in emails: bad spelling may indicate a scam.

If someone calls you pretending to be your bank, always call the bank back (or threaten to); this usually makes scammers extremely nervous.

Use a VPN and HTTPS to avoid having your traffic sniffed on the network.

Last but not least, be sceptical: as infosec professionals, we are all a bit paranoid.
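The “what you have” factor can be made more concrete. Below is a minimal sketch of how an authenticator app can derive a time-based one-time password (TOTP, RFC 6238) from a secret it shares with the server. It assumes the common defaults of HMAC-SHA1, a 30-second time step and 6 digits. The function names are illustrative, and a real deployment should rely on a vetted library and store the secret securely.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Return the TOTP code for `secret` at Unix time `at` (defaults to now)."""
    if at is None:
        at = time.time()
    # Moving factor: the number of `step`-second intervals since the Unix epoch.
    counter = int(at // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte select an offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept the code for the current step or `window` steps either side (clock drift)."""
    if at is None:
        at = time.time()
    return any(
        hmac.compare_digest(totp(secret, at + drift * step, step), submitted)
        for drift in range(-window, window + 1)
    )


if __name__ == "__main__":
    # RFC 6238 test secret; phone and server would compute the same code.
    print(totp(b"12345678901234567890"))
```

The code depends on a secret held on the device, so a phished password alone is not enough to log in. The small `window` in `verify` tolerates clock drift between phone and server, at the cost of keeping each code valid slightly longer.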

David Caissy — Why manual code review might be one of the best investments you could make

David Caissy’s passion is diving, and he made that very clear, as did his girlfriend’s rolling eyes when he started on the subject!

We also got a good dose of dad jokes to break the ice at the beginning of the talk.

This was actually one of the talks I enjoyed the most, probably because I found it to be the most applicable to what I’m doing with some of my clients.

As I’m tackling the problem of security analysis right now, I found it really insightful.

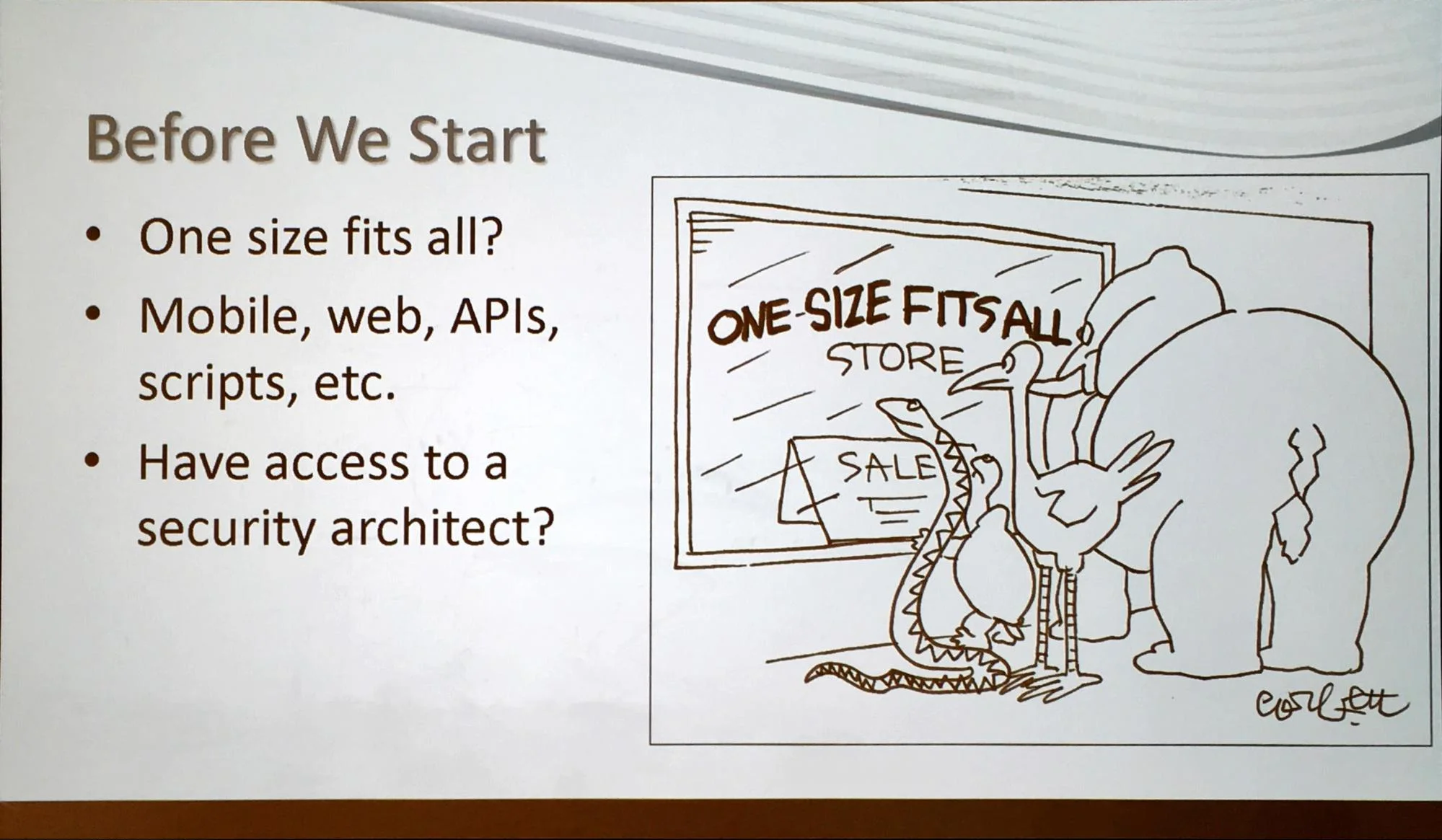

As well as covering several uses of Static Application Security Testing (SAST, i.e. static code analysis) and Manual Code Review (MCR), David also shared his view on several practices:

How to involve external reviewers

How to work with developers (DEV) and Security Architects on code and logic review

How to use the various tools (SAST and IDE checkers) in an incremental approach

The part I found most useful was the collaborative approach David explained and his view on how to review an application incrementally while it is being built.

This is an ongoing problem that security architects and cybersecurity folk have with DevOps or Agile teams.

Security architects reviewing an application need a full picture of the application and its logic; otherwise, a security review might be invalidated.

Fundamental changes in the logic or design of an application might require a further review of certain pieces, which is fully in line with Agile methodology.

I’m going to be a bit controversial here: going fast on delivery might not make the best use of resources and time, but it will make you more flexible and adaptable to ever-changing business needs. The Agile method and optimisation of resources do not always go hand in hand.

David also explained that there is no single view of, or approach to, application code review; the approach must change depending on:

Size of the team

Level of security maturity of the teams

Who you are interfacing with (architect, developer, business analyst, etc.)

The best way to tackle this is to adapt your terminology and register to the level of experience of the person in front of you. After all, there’s no point discussing code-level details with a business analyst!

So, going back to code analysis, which tools can the development team leverage?

IDE-integrated tools that analyse the use of libraries and functions while you write the code, which is useful for finding small errors.

Static Application Security Testing (SAST) tools that go through the code and identify patterns of errors.

DevOps tools and resources, e.g. from OWASP, for identifying AppSec vulnerabilities (e.g. the ASVS, OWASP’s Application Security Verification Standard).

I’m also going to mention some other tools covering parts of the application security life cycle that were missing from the talk (otherwise the talk would have been too complicated):

Dynamic Application Security Testing (DAST) tools, which run a number of tests against the running application but require learning and/or configuration of the tests.

Interactive Application Security Testing (IAST) tools, which instrument the running application to monitor the interactions between its components while it is being tested.

The talk went on to analyse the pros and cons of the various tools and how to make the best use of them.

IDE Security Integration tools integrate directly into the coding interface and enable the identification of errors while coding.

The verdict - Useful, but IDE integration identifies only part of the issues.

Static Application Security Testing (SAST) tools go through the code and identify patterns of errors.

The verdict - Useful, but take them with a pinch of salt. These tools are notorious for generating false positives and for being heavy to integrate into the pipeline (full scan vs incremental scan).

Ultimately, no tool is good or bad in absolute terms; it depends on how it is used and who manages it:

Developers can and should be able to use the SAST tools and tweak the inspection rules; nonetheless, this should be controlled to avoid gaming the system.

The report from SAST should not be the only security check, as these tools miss logical flaws in an application or business process.

SAST tools generate false positives, but their results can identify:

Injection flaws

Deprecated libraries

Hardcoded passwords (unless they are encoded)

Other minor things
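What does “identifying patterns of errors” mean in practice, and why do encoded passwords slip through? The sketch below is a deliberately naive, regex-based version of a SAST-style rule set. The rule names and patterns are illustrative assumptions. Real SAST tools parse the code and track data flow rather than grepping lines.

```python
import re

# Hypothetical rules: (rule id, compiled pattern). Real tools use far richer analysis.
RULES = [
    ("hardcoded-password",
     re.compile(r"""(?i)\b(password|passwd|pwd)\s*=\s*["'][^"']+["']""")),
    ("sql-string-concat",
     re.compile(r"""(?i)["']\s*(select|insert|update|delete)\b.*["']\s*\+""")),
]


def scan(source: str) -> list[tuple[int, str]]:
    """Return (line number, rule id) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule_id, pattern in RULES:
            if pattern.search(line):
                findings.append((lineno, rule_id))
    return findings


SAMPLE = '''
password = "hunter2"
query = "SELECT * FROM users WHERE name = '" + name + "'"
secret = base64.b64decode("aHVudGVyMg==")
'''

for finding in scan(SAMPLE):
    print(finding)
```

The first two lines of the sample are flagged. The third line decodes to the very same password but sails through, which is exactly the “unless they are encoded” caveat in the list above.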

What SAST tools miss:

Logical application flaws

Configuration issues, e.g. passwords saved in plaintext in a config file

Insecure use of certificates or network connectivity

Security of third party or open-source libraries (TBV)

Authentication problems

Access control issues

Cryptography issues

Improper input validation

Output encoding
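Here is a sketch of the kind of logical flaw the list above refers to: a broken access control check, often called an insecure direct object reference. There is no dangerous function call or suspicious string for a pattern-based scanner to match; the bug is a missing business rule. The data model and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    invoice_id: int
    owner: str
    amount: float


# Hypothetical in-memory store standing in for a database.
INVOICES = {
    1: Invoice(1, "alice", 120.0),
    2: Invoice(2, "bob", 560.0),
}


def get_invoice_vulnerable(current_user: str, invoice_id: int) -> Invoice:
    # Syntactically clean: no injection, no hardcoded secret.
    # The flaw is logical: nothing checks that current_user owns the invoice.
    return INVOICES[invoice_id]


def get_invoice_fixed(current_user: str, invoice_id: int) -> Invoice:
    invoice = INVOICES[invoice_id]
    # Enforce the business rule "users may only read their own invoices".
    if invoice.owner != current_user:
        raise PermissionError(f"{current_user} may not read invoice {invoice_id}")
    return invoice


# Alice can read Bob's invoice through the vulnerable path...
print(get_invoice_vulnerable("alice", 2).owner)  # bob
# ...but not through the fixed one.
try:
    get_invoice_fixed("alice", 2)
except PermissionError as exc:
    print("blocked:", exc)
```

Spotting that the ownership check is missing requires knowing the business rule, which is where the human reviewer comes in.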

So at this point, you might ask yourself: if I have all these expensive toys to review my code, what does a human add?

I often get asked this question and the answer is:

A human can analyse logical flaws and chain vulnerabilities and exploits (read: pentesting) to achieve privilege escalation or trigger bugs.

David describes an incremental approach to the problem, like building a house: as a house inspector (yes, that’s right, you have just changed jobs!), you review what’s available at each stage of construction.

Phase 1 - The Foundation

What’s there:

This is the very first phase.

No proof of concept has been built

Some pieces of logic are available (e.g. some of the application flows)

Frameworks and how the various components work together

What you should review:

Technologies and frameworks used

Logical flows between components

Database connectivity

The secure development environment and libraries used

Phase 2 - The Frame

What’s there:

Some of the code has been developed (roughly 20%)

Developers have created and hardened at least one class/module

Some logical flows are being developed

What you should check at this phase:

Input validation and field checks (if they are being enforced) - Refer to OWASP for best practices

Authentication and access control logic

Permission logic

Role-based access controls - roles of the users/privileged users identified

Authentication of modules and use of certificates (self-signed certificates are fine at this phase, but the aim should be to use certificates issued by a trusted certificate authority)

Exception handling and logging:

How does the application react when something fails?

Are there security user stories to test functionalities?

Security event logging

Are there use cases for the logs?

What should be logged?

Where should the log go?

Is the application generating security logs for the security exceptions?
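Two of the phase 2 items fit in one minimal sketch: role-based access control, and a security log entry for the resulting exception. The role names, permission strings and logger name are illustrative assumptions. In practice, the log would be shipped to a central collector or SIEM rather than printed locally.

```python
import logging

# Hypothetical role -> permission mapping, the kind of thing reviewed in phase 2.
ROLE_PERMISSIONS = {
    "viewer": {"report:read"},
    "editor": {"report:read", "report:write"},
    "admin": {"report:read", "report:write", "user:manage"},
}

security_log = logging.getLogger("security")


class AccessDenied(Exception):
    pass


def require_permission(user: str, role: str, permission: str) -> None:
    """Raise AccessDenied, and log a security event, if the role lacks the permission."""
    allowed = ROLE_PERMISSIONS.get(role, set())  # unknown roles get nothing: deny by default
    if permission not in allowed:
        # Security event: who, what and the outcome, so it can be collected and alerted on.
        security_log.warning("access_denied user=%s role=%s permission=%s",
                             user, role, permission)
        raise AccessDenied(f"{user} ({role}) lacks {permission}")


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s %(message)s")
    require_permission("carol", "editor", "report:write")  # allowed: returns silently
    try:
        require_permission("dave", "viewer", "user:manage")
    except AccessDenied as exc:
        print("denied:", exc)
```

The deny-by-default lookup for unknown roles is a design choice worth checking in a review. A typo in a role name then fails closed rather than open.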

Phase 3 - The Roof

What’s there:

Almost half of the application (40%) has been developed

One business flow is complete

Network comms have been set up

What you should check at this phase:

Logical errors/business rules bypass

Web servers and service security

Attack detection (e.g. buffer overflow)

Test specific pieces of the code and, if the application is available, test the authentication flow and other permission-related actions.

Verify that security events are being generated and collected
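“Logical errors/business rules bypass” is easiest to see with an example. The checkout function below is hypothetical: a negative quantity turns part of a purchase into a refund. Nothing about it looks dangerous to a scanner; it only shows up when someone tests the business flow. Prices and names are made up for the example.

```python
from decimal import Decimal

# Hypothetical price list (in a real system this would come from a catalogue service).
PRICES = {"laptop": Decimal("999.00"), "mouse": Decimal("25.00")}


def order_total_vulnerable(items: dict[str, int]) -> Decimal:
    # Trusts client-supplied quantities: a negative quantity reduces the total.
    return sum((PRICES[sku] * qty for sku, qty in items.items()), Decimal("0"))


def order_total_fixed(items: dict[str, int]) -> Decimal:
    total = Decimal("0")
    for sku, qty in items.items():
        if sku not in PRICES:
            raise ValueError(f"unknown item: {sku}")
        # Enforce the business rule server-side: quantities must be positive integers.
        if not isinstance(qty, int) or qty < 1:
            raise ValueError(f"invalid quantity for {sku}: {qty}")
        total += PRICES[sku] * qty
    return total


basket = {"laptop": 1, "mouse": -39}
print(order_total_vulnerable(basket))  # 24.00: a laptop for roughly the price of a mouse
```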

Phase 4 - The Detail and Refining

What’s there:

Most of the application is complete (60-70%)

Complex and critical business flows are being completed

At this stage, no drastic change of the application logic should happen

If critical application logic is still being developed this late in the day, consider going back to one of the previous phases.

What you should check at this phase:

Misconfiguration and configuration files handling

Effective attack detection and alarms (SIEM/Log)

Consistent hardening of the application:

What errors have been identified in other modules?

Are the same errors present in other parts of the application? (SAST can come to the rescue here)

Encryption in transit

Web servers and service security

Deployment management and how the code is being handled
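For “misconfiguration and configuration files handling”, a reviewer might script simple checks over deployment configuration. Typical checks flag debug mode, plaintext secrets, or unencrypted endpoints, the last of which touches on encryption in transit. The sketch below assumes an INI-style file, hypothetical key names, and a hypothetical `vault:` prefix convention for secret references. Real checks would follow your own configuration schema and secret store.

```python
import configparser

# Hypothetical configuration as it might be found in a deployment artefact.
CONFIG_TEXT = """
[app]
debug = true

[database]
host = db.internal
password = S3cretPassw0rd

[api]
base_url = http://payments.internal/api
"""

SECRET_KEYS = {"password", "secret", "api_key", "token"}


def check_config(text: str) -> list[str]:
    """Return human-readable findings for a few common misconfigurations."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    findings = []
    for section in parser.sections():
        for key, value in parser.items(section):
            if key == "debug" and value.lower() in {"true", "1", "yes", "on"}:
                findings.append(f"[{section}] debug mode enabled")
            # Assumption: secrets should be references to a vault, never literal values.
            if key in SECRET_KEYS and not value.startswith("vault:"):
                findings.append(f"[{section}] plaintext secret in '{key}'")
            if value.startswith("http://"):
                findings.append(f"[{section}] unencrypted endpoint in '{key}'")
    return findings


for finding in check_config(CONFIG_TEXT):
    print(finding)
```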

Bringing it all together

The talk concluded that this incremental application review relies on code review at specific phases of development.

The assumption of this incremental approach is that the application is developed organically, and no drastic code changes are being introduced.

The first part of the application development is dedicated to spot-checking and manual code review.

The second part focuses more on higher-level vulnerability checks, which lead to pentesting of specific applications depending on their criticality.

So, you want to be a house inspector — I mean an application code reviewer — what do you need?

A developer background (you need to know how to build things before preaching how to build them right).

Some appsec testing experience to perform vulnerability tests.

Security controls and a safeguarding mindset.

The ability to combine a cybersecurity mindset with a developer mindset.

So, in conclusion:

Tools do not replace humans, but they can help analyse the code.

A vulnerability scan in conjunction with static code analysis provides a powerful set of tools to act on.

Don’t review every line of code; rely on developers to do their due diligence.

Identify the critical part of an application and business flows and provide the developers with what good looks like.

Have teams cross-check their work.

In between talks, a swarm of officers came in and surrounded the main room to secure access, and police officers manned the single entrance. I didn’t manage to take any photos, though, for fear of ending up on the wrong side of the law!

Jackie Lacey, LA County District Attorney, introduced the group and the work they have been doing over the past few years with the Cyber Investigation teams.

After this introduction, Jackie handed the stage to Sean Hassett, who is currently Head Deputy District Attorney in charge of the Cyber Crime Division.

He introduced us to various fraud cases which, while not directly related to cyber incidents, all had an investigation and forensics component for evidence gathering.

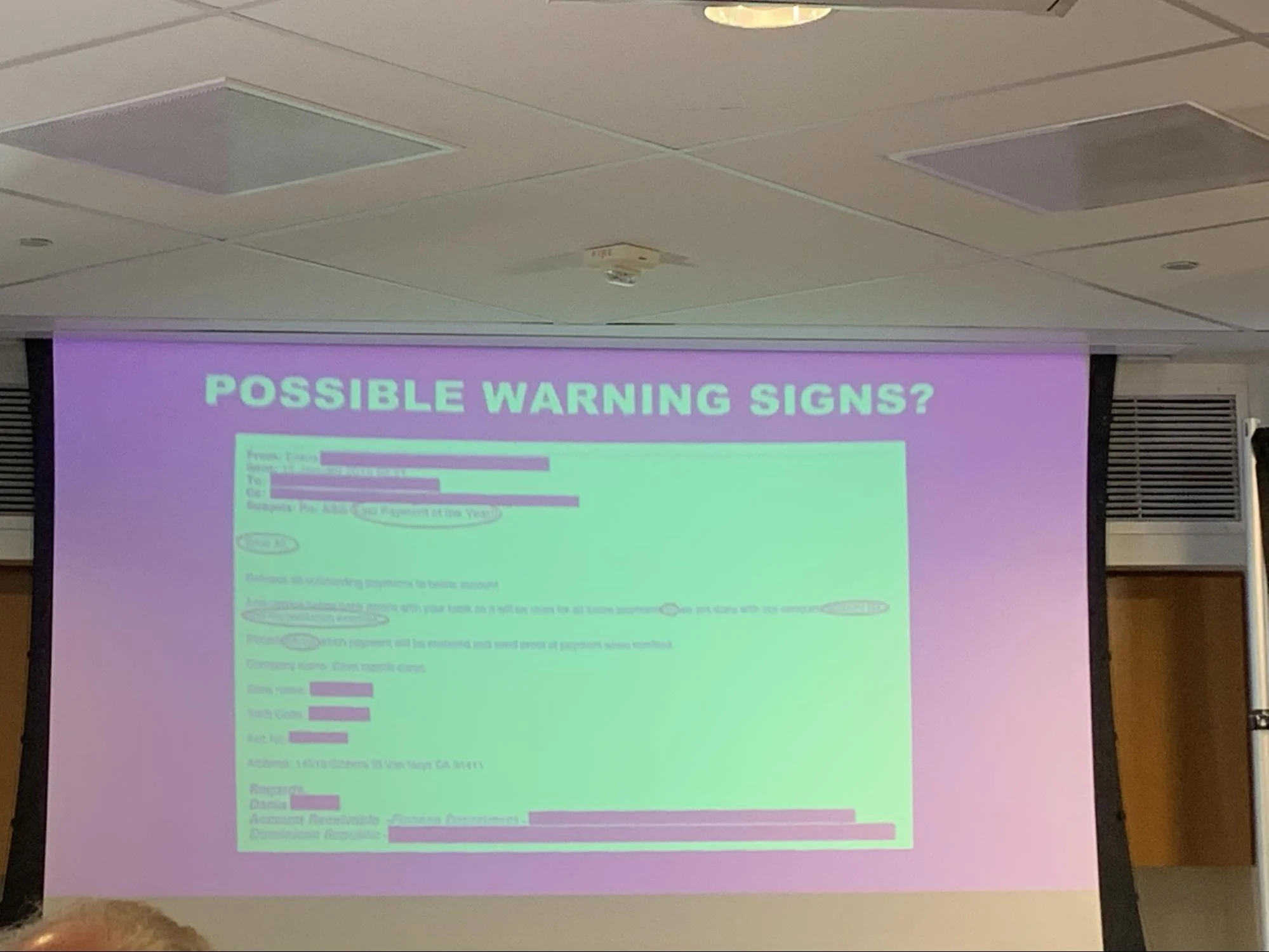

Some of the most compelling evidence came from email communication.

Some of those scams were perpetrated through well-known phishing and scam emails, and Sean walked us through what to look for in an email:

Grammatical errors

Pushy emails (please complete this urgently)

Things which appear out of place
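Sean's checklist lends itself to a toy heuristic. The sketch below scores an email on three signals: urgency phrases, a sender domain that differs from the organisation it claims to be, and a crude sloppiness proxy. The word list, the weights and the rules are all illustrative assumptions. Grammar checking is left out entirely, and real mail filters combine far more signals.

```python
import re
from email.utils import parseaddr

# Hypothetical signals and weights, loosely following the checklist above.
URGENCY_PHRASES = ["urgent", "immediately", "within 24 hours", "act now", "account suspended"]


def phishing_score(from_header: str, subject: str, body: str, expected_domain: str) -> int:
    """Higher scores mean more of the warning signs are present."""
    score = 0
    text = f"{subject} {body}".lower()
    # Pushy emails: two points per urgency phrase found.
    score += sum(2 for phrase in URGENCY_PHRASES if phrase in text)
    # Out of place: the sender's domain is not the organisation it claims to be.
    _, address = parseaddr(from_header)
    domain = address.rsplit("@", 1)[-1].lower()
    if domain != expected_domain and not domain.endswith("." + expected_domain):
        score += 3
    # Crude sloppiness proxy: runs of punctuation such as "!!!".
    if re.search(r"[!?]{3,}", text):
        score += 1
    return score


print(phishing_score(
    "Your Bank <security@yourbank-verify.example>",
    "URGENT: account suspended!!!",
    "Please confirm your details immediately.",
    expected_domain="yourbank.example",
))  # 10
```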

He also walked us through the consequences of each case. He proved that crime does pay, but it’s the county or country that benefits.

Sean continued with some cases of identity theft and one specific case where a fraudster kept on impersonating dentists.

Apparently, the fake dentist also had an office - the identity was really well thought through.

The individual created a number of fake identities with fake accounts, and was ultimately caught with all the identities and social security numbers in his possession.

Law enforcement was able to trace the IP addresses back to one location and finally make the arrest.

The session on law enforcement was definitely informative, but I was hoping it would focus more on the forensic side. Nonetheless, it gave us a flavour of what life is like on the law enforcement side.

Chenxi Wang — Fuzzing in the modern era

Chenxi has been part of the cybersecurity community for a long time and returned to ISSA-LA with a talk on fuzzing and the Google way.

Why is fuzzing not widespread?

Because it is not always easy to define and should be tailored to the specific application and organisation.

Google has got it right, but it is also a bigger target for cyberattacks; nonetheless, good practice should be to verify the robustness of an interface (e.g. by fuzzing it) for 24 hours before releasing the code into production.

Some numbers from the giants:

Google has 28,000 coders for fuzzing tests

Cloudflare has developers and fuzzing engineers

Microsoft has 200,000 fuzzing tests for each artifact
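For readers new to fuzzing, here is a toy mutation fuzzer. It takes a valid seed input and randomly replaces, inserts or deletes bytes. Any input that makes the target raise an unexpected exception is recorded. The target parser and its bug are hypothetical, planted to be found. Coverage-guided fuzzers such as libFuzzer or AFL are far smarter about which mutations to keep, and the numbers above show the scale the giants run at.

```python
import random
from collections.abc import Callable


def last_payload_byte(data: bytes) -> int:
    """Hypothetical target: data[0] is a length byte, followed by the payload."""
    if not data:
        raise ValueError("empty input")  # documented, expected rejection
    length = data[0]
    payload = data[1:1 + length]
    # Planted bug: trusts the length byte instead of checking len(payload).
    return payload[length - 1]


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Apply one to four random byte replacements, insertions or deletions."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, 4)):
        op = rng.randrange(3)
        if op == 0 and data:
            data[rng.randrange(len(data))] = rng.randrange(256)  # replace a byte
        elif op == 1:
            data.insert(rng.randrange(len(data) + 1), rng.randrange(256))  # insert a byte
        elif data:
            del data[rng.randrange(len(data))]  # delete a byte
    return bytes(data)


def fuzz(target: Callable[[bytes], object], seed: bytes,
         iterations: int = 10_000, rng_seed: int = 0) -> list[tuple[bytes, str]]:
    """Run mutated inputs through `target`; collect those raising unexpected exceptions."""
    rng = random.Random(rng_seed)  # fixed seed so findings are reproducible
    crashes = []
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            target(candidate)
        except ValueError:
            pass  # the target's documented way of rejecting bad input
        except Exception as exc:  # anything else is a finding to triage
            crashes.append((candidate, type(exc).__name__))
    return crashes


crashes = fuzz(last_payload_byte, b"\x05hello")
print(f"{len(crashes)} crashing inputs")
for data, error in crashes[:3]:
    print(error, data)
```

Running a loop like this for 24 hours rather than 10,000 iterations is, in spirit, the “verify the robustness of an interface before release” practice mentioned above.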

Chenxi then went on to analyse a common problem: third-party libraries and packages.

It is nothing new that we use libraries from different providers and open-source repositories but, as history has taught us, these are often used as vectors for targeted attacks (see the British Airways hack and the Komodo wallet app).

A lot of utilities for detecting malicious code in third-party libraries are being deployed and maturing. Nonetheless, these tools are in their infancy and should be coupled with behavioural analytics where possible, so you can identify when an application suddenly goes rogue and starts behaving in a completely different way.
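The behavioural idea can be sketched very simply. First, learn which outbound destinations an application talks to during a baseline period. Then alert when it suddenly contacts something new, which is the kind of change you would expect if a compromised dependency started exfiltrating data. The event format, host names and `min_count` threshold are assumptions for illustration; production tools baseline many more behaviours than network destinations.

```python
from collections import Counter

Event = tuple[str, str]  # (application, destination host)


def learn_baseline(events: list[Event], min_count: int = 3) -> dict[str, set[str]]:
    """Destinations each application contacted at least `min_count` times while training."""
    counts = Counter(events)
    baseline: dict[str, set[str]] = {}
    for (app, destination), n in counts.items():
        if n >= min_count:
            baseline.setdefault(app, set()).add(destination)
    return baseline


def detect_deviations(baseline: dict[str, set[str]], events: list[Event]) -> list[str]:
    """Alert on any live connection to a destination outside the app's baseline."""
    return [
        f"{app} contacted unexpected destination {destination}"
        for app, destination in events
        if destination not in baseline.get(app, set())
    ]


# Hypothetical observations: a checkout service during a quiet training week...
training = [("checkout", "payments.example.com")] * 5 + [("checkout", "cdn.example.com")] * 4
# ...and after a compromised dependency starts sending data somewhere new.
live = [("checkout", "payments.example.com"), ("checkout", "collector.attacker.example")]

baseline = learn_baseline(training)
for alert in detect_deviations(baseline, live):
    print(alert)
```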

So how do you start deploying an appsec programme?

I am very close to this topic, as I’m deploying a similar programme myself in a very big organisation, where size and change have quite an impact. Hence, be careful and don’t aim too high:

Start small and scale up

Provide a definition of success and define KPIs so you can measure progress towards an objective

Identify complexity and eliminate it, as complexity is the enemy of security

Automate, but only where it makes sense

Find activities and processes where humans add value

Progressively identify what else can be automated beyond those processes

Consistent automation of processes is better than aiming too high and failing

The talk concluded with some cases and examples of what good looks like. A case that was particularly interesting is the methodology applied by Google.

Google apps stay in staging for at least 24-48 hours; the more critical the application, the more time it spends in testing and fuzzing.

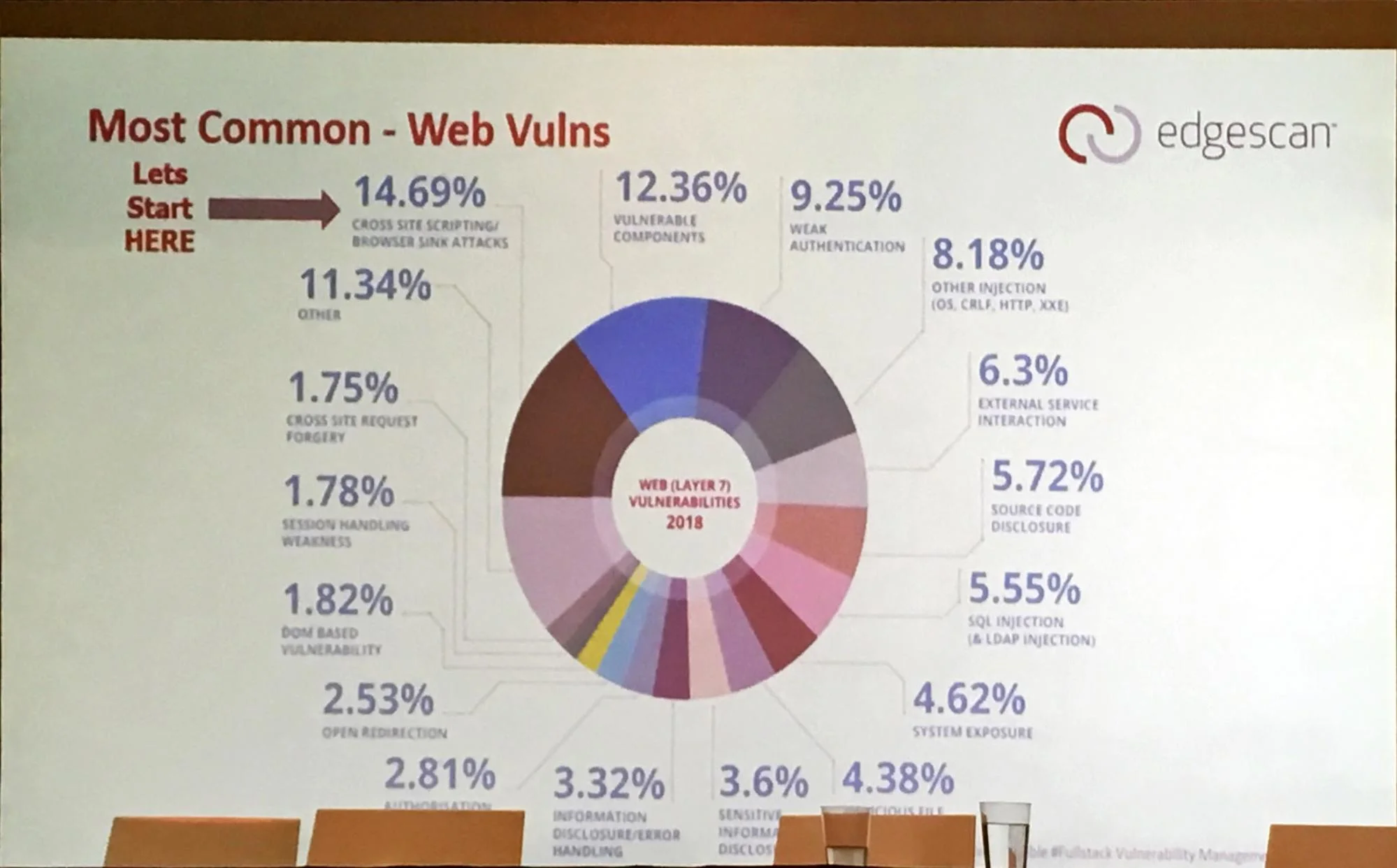

Rahim Jina — Edgescan results and statistics

After coming all the way from Ireland, Rahim was as disappointed as I was to find rain. Appreciating the weather jokes must be a very British thing.

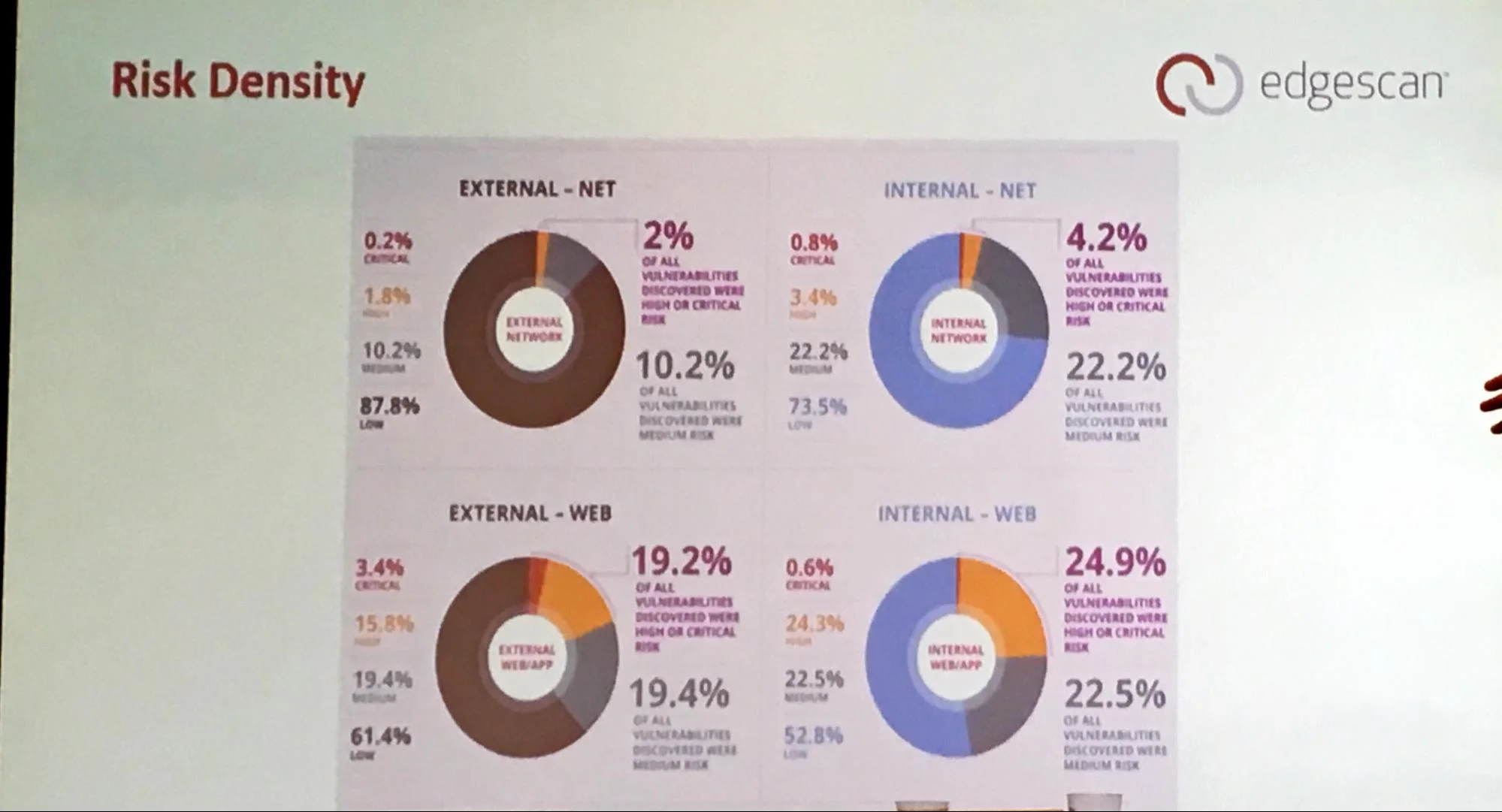

Rahim and Edgescan scan clients’ infrastructure, and the results have been presented at the conference for the past couple of years.

Although the data set is limited, it still provides a good insight into the nature of the vulnerabilities found and what we keep doing wrong.

Network vulnerabilities still account for the majority of vulnerabilities found. This highlights the fact that we are still getting the basics wrong and not strengthening the infrastructure as a whole.

The risk distribution and focus remain on the extranet, i.e. applications exposed externally.

We remain focused on external issues, relying on firewalls for a false sense of protection (insider threat and social engineering, anyone?).

The most common web vulnerabilities:

Cross-site scripting remains the winner

Closely followed by third-party vulnerabilities

And weak or broken authentication

This is consistent with the 2017 OWASP Top 10.

On infrastructure vulnerabilities, upgrading to less vulnerable TLS versions seems to be a struggle, and I can confirm this, having seen it first-hand during most of my adventures in infosec.

SMB and other ancient protocols follow closely behind the TLS issues.
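On the TLS side, one basic control is simply refusing old protocol versions. With Python's standard library, this is a one-line setting on an `ssl.SSLContext` (Python 3.7+). Recent Python versions may already use this floor by default, but setting it explicitly documents the intent. The same idea applies to web server and load balancer configuration, whose syntax differs per product. The sketch only builds and inspects a client context; it makes no network connection.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    # create_default_context() turns on certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse SSLv3, TLS 1.0 and TLS 1.1 outright.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


ctx = make_client_context()
print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```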

The most common vulnerabilities paint a picture of things that could have been fixed with patches.

More importantly, some of the vulnerabilities detected date back to 2003, which is really low-hanging fruit for attackers. Nowadays, there are also tools like https://www.shodan.io that can quickly reveal whether an IP address exposes vulnerable services.

Just as a confirmation, Rahim showed his wall of sheep/shame (DEF CON, anyone?) of services exposed on the web that shouldn't be, especially with vulnerabilities lurking in those services.

The wall of shame summarises the previous analysis and, shamefully, shows how long these issues took to fix.

With vulnerabilities being exploited within roughly three days of their announcement, the 60-70 days to fix revealed here is a really risky position to be in.

Application and network vulnerabilities take about the same time to fix, but they really shouldn't.

Infrastructure and network vulnerabilities tend to be fixable with less impact and a quicker turnaround, while application fixes require more time, especially if the application is internally developed.

Rahim concluded by stressing that 0-days are mostly a concern for big targets. The majority of organisations could avoid falling victim to cyberattacks just by doing the basics right, such as applying patches with a quick turnaround.

Travis McPeak returned to the beach with Netflix's security pizza. It was a repeat of a previous talk at AppSec Cali and went a step further than the presentation William Bengtson gave at Black Hat 2018 on credential compromise detection.

There was no William on this occasion, though, as he was preparing for his move to Capital One.

The talk leveraged the pizza analogy, using a different ingredient for each layer of security. The presentation was a good one, but this time it was missing the banter and the exchange between Travis and William.

Another interesting topic raised was the temporary keys issued to developers, which sometimes carry higher privileges but remain subject to access control.

Developers will always want full control of and access to resources. To address this, Netflix has almost managed to get one-time access working on AWS, similar to the just-in-time access that Azure is working on in Security Center.

Another layer added on top of the security pizza is collecting and reducing the roles and permissions each virtual machine (VM) has.
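Two ideas combine here: collecting and reducing roles and permissions, and short-lived developer keys. The first can be sketched as a comparison between what an identity has been granted and what it has actually used over an observation window. The second is a credential that expires on its own. This is not Netflix's tooling. The permission strings merely mimic AWS IAM action names, and the usage data and one-hour lifetime are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


def unused_permissions(granted: set[str], used: set[str]) -> set[str]:
    """Permissions granted but never exercised in the observation window: removal candidates."""
    return granted - used


@dataclass(frozen=True)
class TemporaryCredential:
    principal: str
    permissions: frozenset[str]
    expires_at: datetime

    def allows(self, action: str, now: datetime) -> bool:
        # An expired credential grants nothing, whatever permissions it carried.
        return now < self.expires_at and action in self.permissions


def issue(principal: str, permissions: set[str], lifetime: timedelta,
          now: datetime) -> TemporaryCredential:
    """Issue a short-lived credential scoped to exactly the permissions requested."""
    return TemporaryCredential(principal, frozenset(permissions), now + lifetime)


# Hypothetical data: what a developer role was granted vs. what it actually used.
granted = {"s3:GetObject", "s3:PutObject", "s3:DeleteBucket", "iam:PassRole"}
used = {"s3:GetObject", "s3:PutObject"}
print(sorted(unused_permissions(granted, used)))  # ['iam:PassRole', 's3:DeleteBucket']

now = datetime(2019, 6, 1, 12, 0, tzinfo=timezone.utc)
cred = issue("dev-alice", used, timedelta(hours=1), now)
print(cred.allows("s3:GetObject", now + timedelta(minutes=30)))  # True
print(cred.allows("s3:GetObject", now + timedelta(hours=2)))     # False (expired)
print(cred.allows("iam:PassRole", now))                          # False (not granted)
```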

Last, but not least, the level of monitoring and alerting Netflix does is terrific. Rarely have I seen an organisation that knows its infrastructure well enough to detect so precisely when something deviates from the norm.

Beyond the content of the talk, I was amazed by how much Netflix shares and gives back to the community.

Conclusions

This concludes the second and final part of the article. The conference has been amazing, and I’m looking forward to meeting up with the infosec community in Los Angeles again next year.

The weather was a bit iffy at the beginning but the location is still amazing and the logistics are impeccable. I am just wondering what will happen if the conference becomes more popular because I think it really benefits right now from the nice and familiar feeling of a small conference.

Location-wise, Santa Monica is always a nice place for a run on the beach in the morning or evening — or even both :-) — and there is so much to explore, both on the sandy beaches and downtown L.A. with speakeasy bars and art districts.

I also managed to take a small break and enjoy John Wick 3 in one of the newest cinemas in Santa Monica (in the mall).

The bar exploring didn’t disappoint either, with some unique gems here and there. One was the adults-only cocktail bar, modelled on an old-style adult video store. Another was the throwback ‘No Vacancy’ cocktail bar behind an old school, with vintage books as bar menus.

Next year I will be going over to the conference with my famous cloud talk, “Is the Cloud Secure? It is if you do it right”, as well as my new talk, “Security Architecture Slayers of Dragons and Defender of the Realms”, and I can’t wait.

About Francesco Cipollone

Cybersecurity Cloud Expert, Head of Security Architecture HSBC & virtual CISO Elexon

Francesco is an accomplished, motivated and versatile security professional with several years of experience in the cybersecurity landscape. He helps organisations achieve strategic security goals with a driven and pragmatic approach.

Francesco is the Director of Events for the Cloud Security Alliance, an active public speaker and a writer on Medium. He is the founder of the London cybersecurity consultancy NSC42 Ltd and previously co-founded Technet SrL.

Find Francesco on Twitter (@FrankSEC42) and LinkedIn.